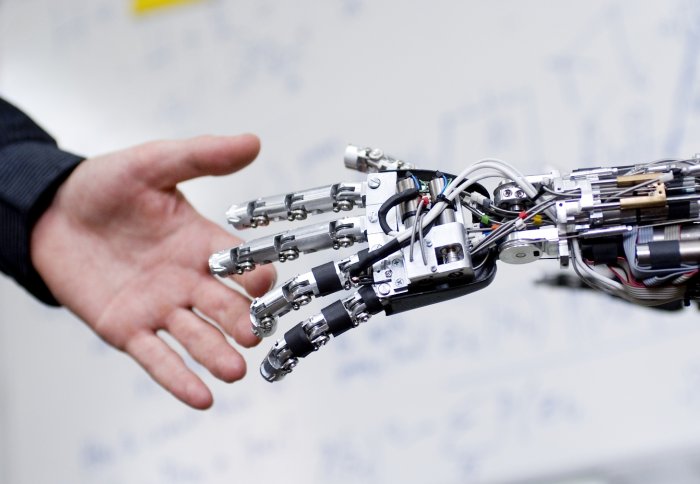

Excitement. Innovation. Passion. These are the true feelings one should have towards Artificial Intelligence (AI); without AI, humanity would scarcely be as advanced as it is today.

Of course, humans are disinclined to change. They always have been, be it during the Agricultural Revolution, the Industrial Revolution, or the Information Revolution. Yet each of these revolutions demonstrates that humans fared well after them; in fact, they fared much better.

After the Agricultural Revolution, innovative farming methods and improved livestock breeding dramatically increased food production, leading to population booms and better health for most.

After the Industrial Revolution, the automation of physical labour freed many people from menial tasks, leading to a much higher standard of living and a boost to the global economy. This pattern of positive impact shows that change is both necessary and beneficial for humanity.

Humans adapt. Lives blossom. Economies flourish.

The AI in widespread use today is “narrow” AI, meaning that the technology is exceptionally ‘intelligent’ at one specific task: for example, facial recognition for security, or the machine learning behind YouTube’s recommendation algorithm. At these particular tasks, there is no doubt that AI performs considerably better than any human could, and for that, AI deserves praise.

Unquestionably, AI poses certain threats to humankind: job automation, data privacy, socio-economic inequality and a potential arms race. Yet these threats will certainly not trigger the AI apocalypse! The moment a “narrow” AI is asked to apply its ‘intelligence’ to a different task, it goes down like a lead balloon; a facial-recognition system, for instance, cannot suddenly start recommending videos.

Certain scientists believe that Artificial General Intelligence (AGI) is becoming more and more plausible. AGI can be thought of as the point at which AI has advanced so far that it matches the almighty human intelligence. Ultimately, this hypothetical ability means that AI could learn, and excel at, any task a human can perform. If AGI develops further, we may reach the technological singularity: the point at which humanity risks permanently losing control of AI, since machines would continuously learn how to create superior versions of themselves.

Humans, do not despair! A plethora of research scientists, such as the respected Sir Roger Penrose, are adamant that the technological singularity cannot be achieved, for multiple reasons. Science has already revealed fundamental limits in our world: for instance, humans will never be able to travel faster than the speed of light. By analogy, there could be fundamental limits to the recursive, self-developing nature of machines.

More significantly, machines are not sentient beings: they have no consciousness, no self-awareness, and no mind of their own. Richard Feynman eloquently described a computer as:

“A glorified, high-class, very fast but stupid filing system”

Richard Feynman

a description which supports the view that machines have no desire to “destroy humanity” or to replace your job. IBM’s Deep Blue is not planning to wake up tomorrow, conclude that humans are purposeless, and destroy our planet. It has no goal to do so; it was not programmed to do this.

The key to remaining in control of Artificial Intelligence is AI regulation: the development of public laws to control the use of AI. This is vital if we want to steer away from the singularity described above, since AI could be misused in almost every aspect of our lives: discrimination in hiring, data privacy, financial fraud, weapons and much more. All of these harms occur only if AI is given enough power. Thus, through AI regulation, we can keep these threats to a minimum.

Indeed, in a survey of 50 Nobel laureates, disease, ignorance, terrorism, climate change, population rise and Donald Trump were all named as greater threats to humankind than the ‘innocent’ AI.

References

- Komlos, J. “Thinking about the industrial revolution.” Journal of European Economic History 18(1) (1989): 191.

- Wirth, Norbert. “Hello marketing, what can artificial intelligence help you with?” International Journal of Market Research 60(5) (2018): 435-438.

- Built-In Magazine. “Dangerous risks of AI.” 6 July 2021.

- Goertzel, Ben. “Artificial general intelligence.” Ed. Cassio Pennachin. Vol. 2. New York: Springer, 2007. Preface.

- AI Multiple. “When will singularity happen?” 6 November 2021.

- Penrose, Roger. “The Emperor’s New Mind.” Oxford University Press, 1989.

- Edward Elgar Publishing. “Competition Law for the Digital Economy.” 2019, p. 75.

- Psychology Today. “The Myth of Sentient Machines.” 1 June 2006.

- Indy100. “How humanity will end, according to Nobel Prize winners.”

- Science Alert. “AI is not actually an existential threat to humanity.” 11 April 2021.